Artificial Intelligence (AI) and Machine Learning (ML) have been much-discussed topics since the release of ChatGPT last year and, to a lesser extent, for decades before that, stretching all the way back to the 1950s. The explosive growth in the use of Large Language Models (LLMs) over the past 12 months to generate text and imagery has prompted considerable thought about both the positive and negative impacts of AI and ML.

Introduction

Some have called for a pause on the development of AI and ML systems out of fear of reaching an AI singularity, where use of the technology reaches damaging and irreversible proportions. What is certain is that, like any technology, AI and ML can have enormous benefits when deployed responsibly, i.e., in a way that maximises the benefits whilst mitigating the risks associated with poor, rushed or outright malicious implementations.

And so the field of Responsible AI has evolved to address these concerns and, most importantly, to maximise the benefits for organizations and for society as a whole.

Read more on our Expert Guide to Responsible AI

Guidance on the responsible adoption of AI and ML already exists, including sets of principles. Principles aren't new, of course, but perhaps never before have they been as important as now, as we scale up the automation of business and government rules with less manual handling by humans but, hopefully, more reliable supervision. The OECD offers one such set of principles, covered in the expert guide referenced above.

There are also standards, including the NIST AI Risk Management Framework (RMF) and the nascent ISO/IEC 42001. Both fold AI- and ML-specific considerations into frameworks already used to address requirements related to cyber security, information security and privacy and, in the case of ISO, other risk domains and management systems such as quality, safety, environment and asset management.

What's available in the 6clicks content library

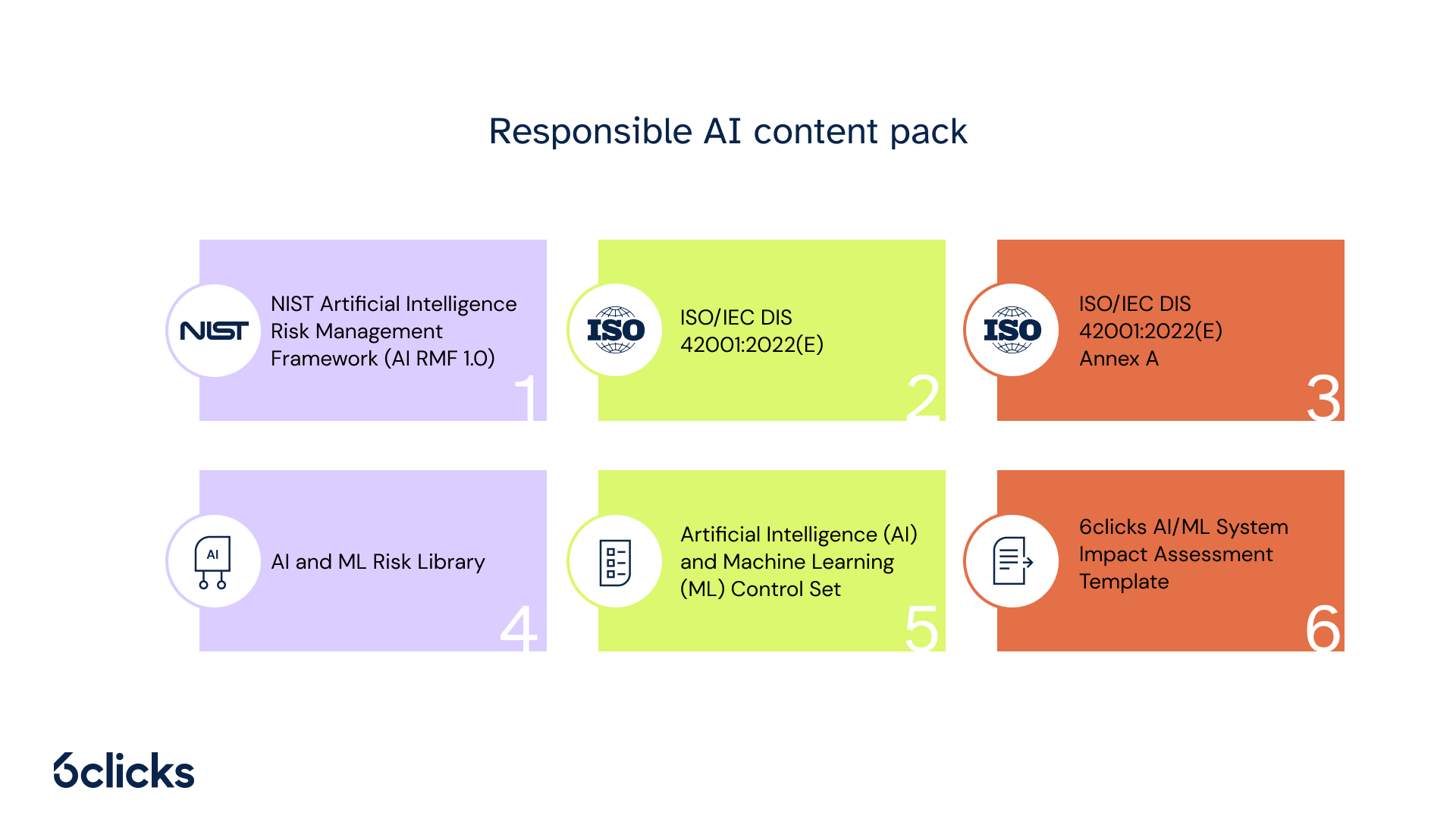

6clicks has curated a content pack that includes the relevant standards and much more. Specifically:

- 6clicks Responsible AI Playbook

- NIST Artificial Intelligence Risk Management Framework (AI RMF 1.0)

- ISO/IEC DIS 42001:2022(E)

- ISO/IEC DIS 42001:2022(E) Annex A

- AI and ML Risk Library

- Artificial Intelligence (AI) and Machine Learning (ML) Control Set

- 6clicks AI/ML System Impact Assessment Template

This content pack helps organizations get started with their Responsible AI programs in the 6clicks platform quickly and easily, including the relevant standards, a risk library, a control set and an assessment template.

View the Responsible AI content pack in the 6clicks Marketplace

The 6clicks platform also includes all the Governance, Risk and Compliance (GRC) functionality necessary to help you work with the Responsible AI content. Specifically:

- The risk module to perform an initial review of AI & ML risks for relevance and significance to your organisation

- The control module to build and refine controls and responsibilities aimed at mitigating relevant AI & ML risks and addressing compliance requirements

- The audit and assessment module to perform system impact assessments in accordance with the NIST and ISO standards

Many other modules and platform features will also help you on your Responsible AI journey, including Hailey AI for mapping between standards, or between your internal controls and standards, and my personal favourite, the Trust Portal, where you can showcase the results of any audits and assessments along with supporting documents such as policies and certificates.

Find out more about our Responsible AI solution

Finally, as our customers build out Responsible AI solutions in 6clicks, we are planning for our partner community to help them. Organizations may need help reviewing AI and ML risks, refining AI- and ML-specific controls and performing audits and assessments. If you have advisors and consultants with AI and ML skills and expertise to share, then please consider becoming a 6clicks partner.

Written by Andrew Robinson

Andrew started his career in the startup world and went on to work in cyber and information security advisory roles for the Australian Federal Government and several Victorian Government entities. Andrew has a Master's in Policing, Intelligence and Counter-Terrorism (PICT), specialising in Cyber Security, and holds IRAP, ISO 27001 LA, CISSP, CISM and SCF certifications.