
What are the potential risks of using AI?

Artificial intelligence spans many fields and applications, including machine learning, robotics, and smart assistants. As AI becomes more widespread, developers, organizations, and policymakers are responsible for considering the risks and dangers it poses to individuals and organizations. Here are some of them:

Privacy concerns and security threats

Many AI applications involve collecting and processing vast amounts of personal or confidential data. This raises concerns about data privacy and security, and about how that data can be protected against unauthorized access, data breaches, and misuse.

Bias and manipulation

AI recognizes patterns and generates predictions by learning from data, so biased training data or algorithms can produce skewed and unfair outcomes. AI can also be used for manipulation: deepfakes, which are media digitally altered using AI, pose threats of misinformation, identity theft, and reputational damage.

Ethical dilemmas

Because AI systems can operate with a degree of autonomy and make decisions without direct human input, assigning accountability becomes challenging when errors or unintended consequences occur. Establishing a clear line of responsibility for AI systems requires careful consideration of legal, ethical, and regulatory frameworks.

To mitigate these risks, organizations are urged to incorporate responsible AI in their decision-making.

What is responsible AI?

Responsible AI refers to the ethical and trustworthy development and deployment of AI systems. Microsoft, for example, bases its approach to responsible AI on six principles: fairness, inclusiveness, reliability and safety, transparency, privacy and security, and accountability. Organizations integrating AI into their products and services should adopt responsible AI practices, such as clearly defining the purposes of their AI systems and ensuring that those systems function as intended.

By prioritizing responsible AI, organizations can protect the safety of employees and end users, promote a positive brand reputation, and foster customer trust. Voluntary frameworks such as NIST’s AI Risk Management Framework are designed to help organizations implement responsible AI and strengthen their risk management strategies.

What is the NIST AI Risk Management Framework?

The AI Risk Management Framework (AI RMF), published by the National Institute of Standards and Technology (NIST), provides methods that facilitate the responsible design, development, use, and evaluation of AI products, services, and systems and increase their trustworthiness.

Organizations can voluntarily use it as a resource to guide their use, development, or deployment of AI systems and help manage and mitigate the risks that may emerge from operating them. The NIST AI RMF not only examines the negative aspects of AI but also sheds light on the positive effects of the technology.

Part 1: Foundational information

The Framework is divided into two parts. The first part provides foundational information: the kinds of harm that may arise from the use of AI, the challenges of managing the risks of these harms, and the characteristics of trustworthy AI.

The AI RMF 1.0 groups the potential harms of using AI into three categories. NIST describes harm as a negative impact of AI that can be experienced by individuals, groups, communities, organizations, and others.

- Harm to people – Covers harm to the civil liberties, rights, physical or psychological safety, or economic opportunity of individuals, groups, and societies.

- Harm to an organization – Covers harm to an organization’s business operations, harm to its reputation, and harm in the form of security breaches or monetary loss.

- Harm to an ecosystem – Covers harm to interconnected and interdependent elements and resources, harm to the global financial system, supply chain, or interrelated systems, and harm to natural resources, the environment, and the planet.

In addition, the AI RMF 1.0 identifies several challenges for AI risk management:

Risk measurement

AI risks that are not well defined or well understood are difficult to measure, whether quantitatively or qualitatively. Other challenges in measuring AI risks include:

- Risks related to third-party software, hardware, data, and their use

- Emergent risks and how to identify, track, and measure them

- The lack of reliable metrics and consensus on robust and verifiable measurement methods for risk, trustworthiness, and applicability to different AI use cases

- Risks arising at different stages of the AI lifecycle

- Risks in real-world settings, which may be different from risks in a controlled environment

- The inscrutability of AI systems, which can be a result of their unexplainable or uninterpretable nature, lack of transparency or documentation, and inherent uncertainties, and

- Systematizing baseline metrics for comparison when augmenting or replicating human activity

Risk tolerance

The Framework defines risk tolerance as an organization’s readiness to bear risk in order to achieve its objectives. Risk tolerance can be influenced by legal or regulatory requirements, is specific to the application and use case, and is likely to change over time as AI systems, policies, and norms evolve.

Risk prioritization

The risks an organization judges to be highest call for the most urgent attention. Prioritization may also differ between AI systems designed or deployed to interact directly with humans and those that are not.
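To make tolerance and prioritization concrete, here is a minimal Python sketch of a risk register that ranks the risks exceeding a tolerance threshold. The Framework does not prescribe a scoring formula: the likelihood × impact score, the 1 to 5 impact scale, the extra weight for human-facing systems, and the `AIRisk` and `prioritize` names are all illustrative assumptions.

```python
from dataclasses import dataclass


@dataclass
class AIRisk:
    """One entry in a hypothetical AI risk register."""
    name: str
    likelihood: float            # estimated probability of occurring, 0.0 to 1.0
    impact: int                  # assumed ordinal scale: 1 (negligible) to 5 (severe)
    interacts_with_humans: bool = False

    def score(self) -> float:
        # Assumed scoring: likelihood x impact, weighted 1.5x for systems
        # that interact directly with people.
        weight = 1.5 if self.interacts_with_humans else 1.0
        return self.likelihood * self.impact * weight


def prioritize(risks: list[AIRisk], tolerance: float) -> list[AIRisk]:
    """Keep risks whose score exceeds the tolerance, highest score first."""
    above = [r for r in risks if r.score() > tolerance]
    return sorted(above, key=lambda r: r.score(), reverse=True)


risks = [
    AIRisk("chatbot gives unsafe advice", likelihood=0.2, impact=5, interacts_with_humans=True),
    AIRisk("forecast model drifts", likelihood=0.5, impact=2),
    AIRisk("training logs retained too long", likelihood=0.1, impact=3),
]
for risk in prioritize(risks, tolerance=0.5):
    print(f"{risk.name}: {risk.score():.2f}")
```

Because tolerance is application-specific and changes over time, keeping it as a parameter rather than a constant lets the same register be re-ranked as policies evolve.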

Organizational integration and management of risk

AI risk management should be integrated into broader enterprise risk management strategies and processes. Organizations need to establish and maintain appropriate accountability mechanisms, roles, and responsibilities to support effective risk management.

Lastly, Part 1 of the AI RMF 1.0 characterizes trustworthy AI systems as:

- Valid and reliable

- Safe, secure, and resilient

- Accountable and transparent

- Explainable and interpretable

- Privacy-enhanced

- Fair with harmful bias managed
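The "fair with harmful bias managed" characteristic can be checked in part with simple outcome metrics. The sketch below computes per-group selection rates and the largest gap between them, a quantity often called the demographic parity difference. It is only one of many fairness metrics, the Framework does not mandate it, and the 0.1 review threshold is a hypothetical value.

```python
from collections import defaultdict


def selection_rates(predictions, groups):
    """Share of positive predictions (1s) for each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += pred
    return {group: positives[group] / totals[group] for group in totals}


def parity_gap(predictions, groups):
    """Largest difference in selection rate between any two groups."""
    rates = selection_rates(predictions, groups)
    return max(rates.values()) - min(rates.values())


preds = [1, 1, 0, 1, 0, 0, 1, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
gap = parity_gap(preds, groups)
if gap > 0.1:  # hypothetical review threshold
    print(f"Selection-rate gap of {gap:.2f} exceeds threshold; review for bias")
```

A large gap is a signal for investigation rather than proof of unfairness; the appropriate metric and threshold depend on the use case.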

Part 2: Core and profiles

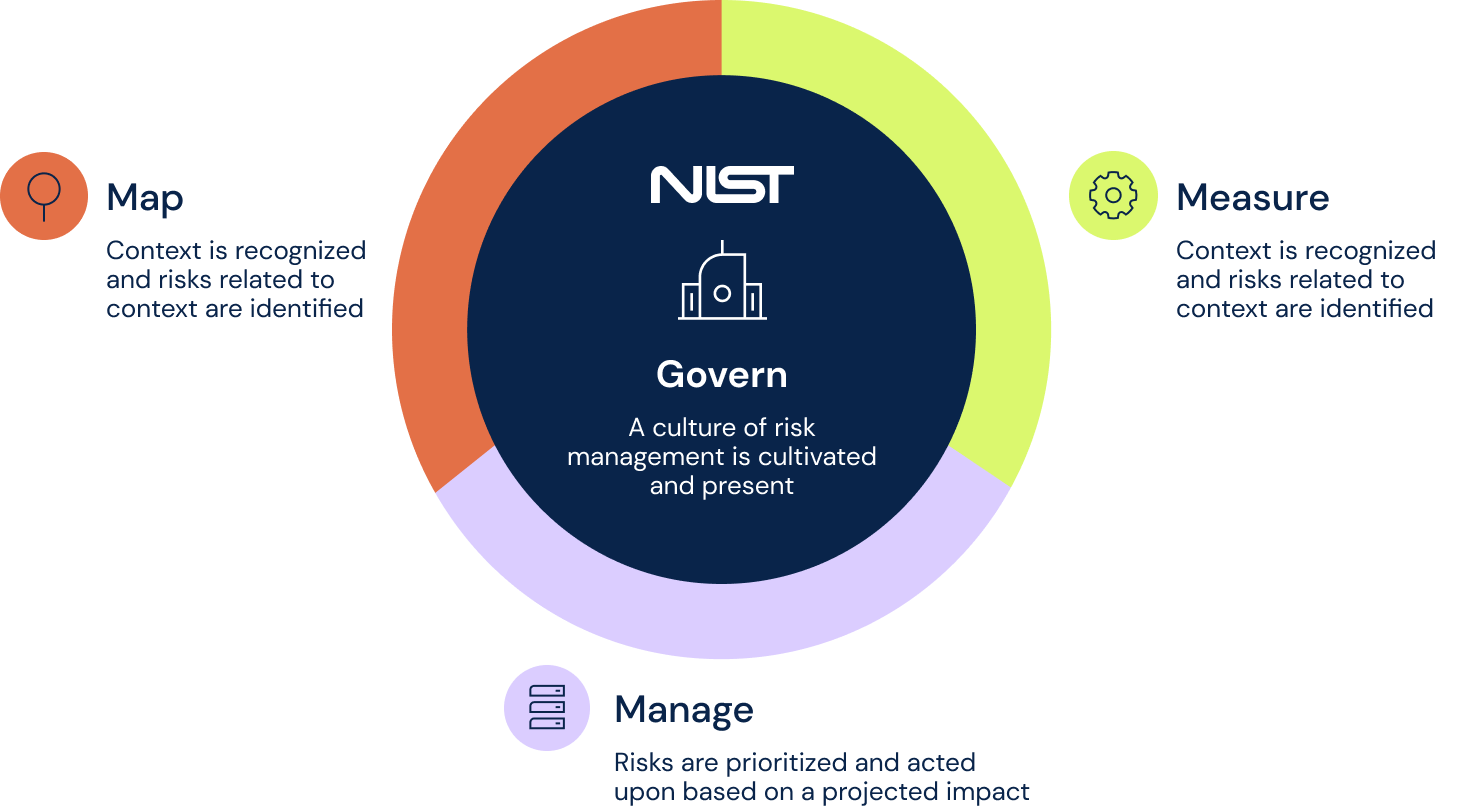

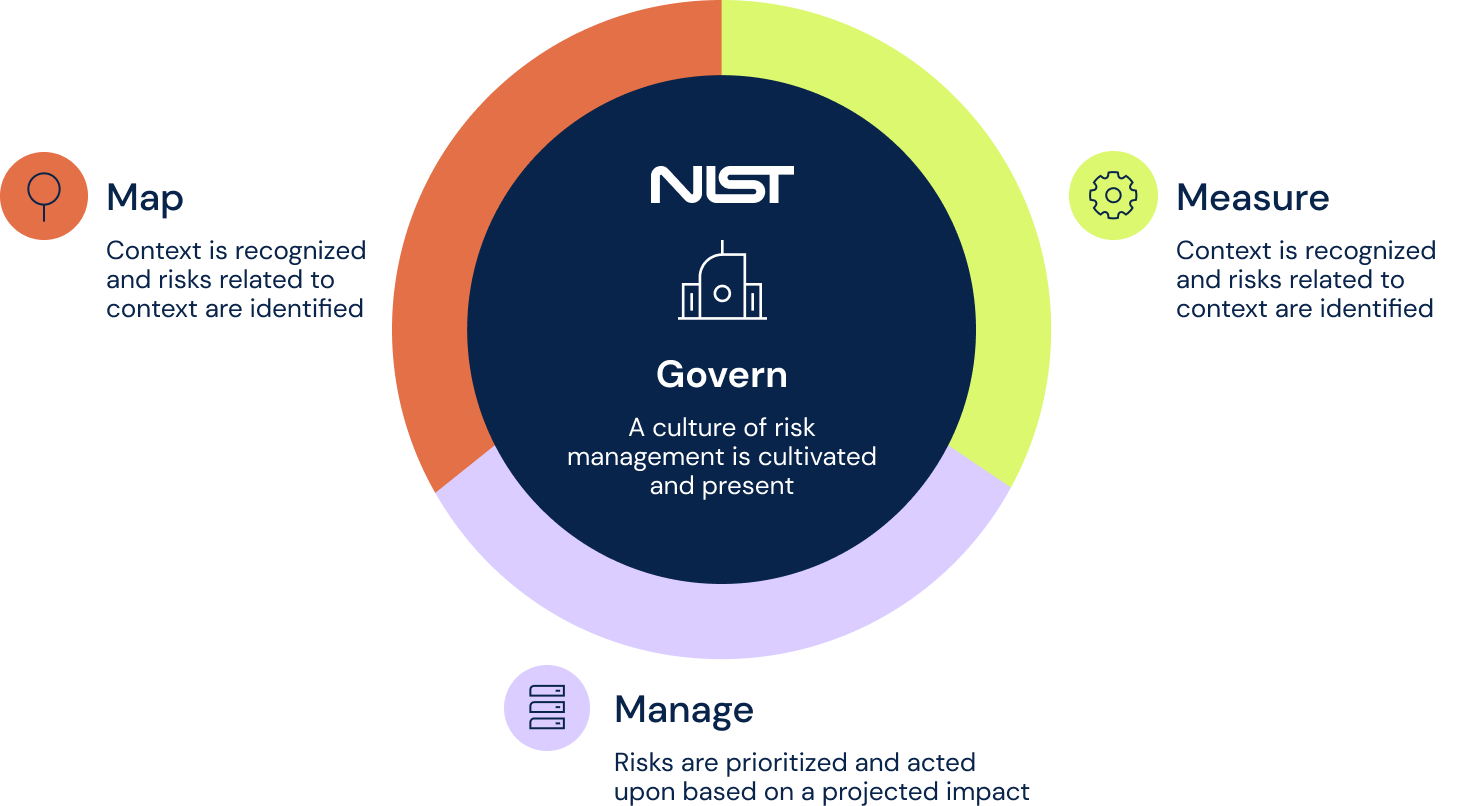

The second part covers the Core of the Framework, which is organized into four functions (GOVERN, MAP, MEASURE, and MANAGE), as well as AI RMF Profiles. The AI RMF 1.0 Core provides outcomes and actions that help organizations plan activities to manage AI risks and responsibly develop trustworthy AI systems. The Framework recommends performing risk management continuously throughout the AI system lifecycle.

Govern

The GOVERN function of the AI RMF 1.0:

- Promotes and implements a culture of risk management within organizations that design, develop, deploy, evaluate, or acquire AI systems

- Delineates processes, documents, and organizational schemes that anticipate, identify, and manage the risks a system can pose to users and others across society, as well as the procedures to achieve those outcomes

- Includes processes for assessing potential impacts

- Provides a structure by which AI risk management functions can align with organizational principles, policies, and strategic priorities

- Connects technical aspects of AI system design and development to organizational values and principles

- Addresses the full product lifecycle and associated processes, including legal and other issues concerning the use of third-party software or hardware systems and data

GOVERN is a cross-cutting function that runs through the entire AI risk management process and enables the other functions of the Framework. It is organized into categories and subcategories, which can be summarized as follows:

- Effective implementation of established and transparent policies, processes, procedures, and practices related to the mapping, measuring, and managing of AI risks across the organization

- Established accountability structures allowing appropriate teams to be empowered, responsible, and trained for mapping, measuring, and managing AI risks

- Prioritization of workforce diversity, equity, inclusion, and accessibility processes when mapping, measuring, and managing risks throughout the AI lifecycle

- Commitment of organizational teams to a culture that considers and communicates AI risk

- Establishment of processes for robust engagement with relevant organizations and individuals

- Establishment of policies and procedures for addressing AI risks and benefits arising from third-party software and data and other supply chain issues

Practices for governing AI risks are outlined in the NIST AI RMF Playbook.

Map

The MAP function of the AI RMF 1.0:

- Improves an organization’s capacity for understanding contexts

- Tests an organization’s assumptions about the context of use

- Enables an organization to recognize when systems are not functional within or out of their intended context

- Highlights the positive and beneficial uses of an organization’s existing AI systems

- Improves understanding of limitations in AI processes

- Determines constraints in real-world applications that may lead to negative impacts

- Identifies known and foreseeable negative impacts related to the intended use of AI systems

- Anticipates risks of the use of AI systems beyond intended use
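One practical outcome of mapping is a documented context of use that can later be checked against live inputs, so the organization can tell when a system is operating outside it. The sketch below assumes that context is recorded as allowed numeric ranges per input feature plus a set of permitted regions; the `IntendedUse` class and `out_of_context_reasons` function are hypothetical names, and real systems would typically need richer checks.

```python
from dataclasses import dataclass, field


@dataclass
class IntendedUse:
    """Hypothetical record of a model's documented context of use."""
    feature_ranges: dict                               # feature -> (min, max) covered in validation
    allowed_regions: set = field(default_factory=set)  # empty set means no region restriction


def out_of_context_reasons(record: dict, spec: IntendedUse) -> list:
    """List the ways an input record falls outside the documented context."""
    reasons = []
    for feature, (low, high) in spec.feature_ranges.items():
        value = record.get(feature)
        if value is None:
            reasons.append(f"{feature} missing")
        elif not low <= value <= high:
            reasons.append(f"{feature}={value} outside [{low}, {high}]")
    region = record.get("region")
    if spec.allowed_regions and region not in spec.allowed_regions:
        reasons.append(f"region {region!r} not in intended use")
    return reasons


spec = IntendedUse({"age": (18, 75), "income": (0, 250_000)}, {"AU", "NZ"})
print(out_of_context_reasons({"age": 82, "income": 60_000, "region": "AU"}, spec))
```

Returning reasons instead of a single yes/no keeps the output usable as documentation when an out-of-context case is escalated.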

The MAP function establishes context so that organizations can accurately frame AI risks and better identify them, along with broader contributing factors. What organizations learn from the MAP function can help prevent negative risks and inform decisions, such as whether using, developing, or deploying an AI system is warranted in the first place.

Outcomes of the MAP function form the basis for the MEASURE and MANAGE functions. If the organization decides to proceed with an AI system, it should apply the MEASURE and MANAGE functions, together with the policies and procedures established under the GOVERN function, to guide its AI risk management efforts.

The categories of the MAP function can be outlined as follows:

- Establishing and understanding context

- Categorization of AI systems

- Understanding AI capabilities, targeted usage, goals, and expected benefits and costs compared with appropriate benchmarks

- Mapping of risks and benefits for all components of the AI system including third-party software and data

- Characterization of impacts on individuals, groups, communities, organizations, and society

Practices for mapping AI risks are outlined in the NIST AI RMF Playbook.

Measure

The MEASURE function of the AI RMF 1.0 employs tools and methods for analyzing, assessing, benchmarking, and monitoring AI risk and related impacts by using the knowledge developed from the MAP function. Measuring AI risks includes documenting aspects of a system’s functionality and trustworthiness and tracking metrics for trustworthy characteristics, social impact, and human-AI configurations.

Measurement processes under the MEASURE function should include rigorous software testing and performance assessment methodologies with measures of uncertainty, comparisons to performance benchmarks, and formalized reporting and documentation of results.
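As an illustration of reporting performance with a measure of uncertainty and comparing it to a benchmark, the sketch below computes a percentile bootstrap confidence interval for classification accuracy using only the Python standard library. The bootstrap is one common choice among several, and the 0.85 benchmark is a hypothetical figure.

```python
import random


def bootstrap_accuracy_ci(y_true, y_pred, n_resamples=2000, alpha=0.05, seed=0):
    """Return (accuracy, lower, upper) using a percentile bootstrap interval."""
    rng = random.Random(seed)                       # fixed seed keeps results repeatable
    correct = [int(t == p) for t, p in zip(y_true, y_pred)]
    n = len(correct)
    stats = []
    for _ in range(n_resamples):
        # Resample the per-example outcomes with replacement
        sample = [correct[rng.randrange(n)] for _ in range(n)]
        stats.append(sum(sample) / n)
    stats.sort()
    lo_idx = int(n_resamples * alpha / 2)
    hi_idx = n_resamples - 1 - lo_idx
    return sum(correct) / n, stats[lo_idx], stats[hi_idx]


y_true = [1] * 100
y_pred = [1] * 90 + [0] * 10
acc, low, high = bootstrap_accuracy_ci(y_true, y_pred)
benchmark = 0.85  # hypothetical performance benchmark
print(f"accuracy={acc:.2f}, 95% CI=({low:.2f}, {high:.2f})")
print("meets benchmark" if low >= benchmark else "cannot confirm benchmark is met")
```

Comparing the lower bound, rather than the point estimate, against the benchmark is a conservative design choice: it avoids claiming a target is met when the test data cannot support that claim.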

After completing the MEASURE function, an organization should have objective, repeatable, and scalable test, evaluation, verification, and validation (TEVV) processes in place, followed, and documented. These processes should use metrics and measurement methods that adhere to scientific, legal, and ethical standards and can be performed transparently. Measurement outcomes then feed into the MANAGE function to support risk monitoring and response.

The MEASURE function also has categories and sub-categories. Categories include:

- Identification and application of appropriate methods and metrics

- Evaluation of systems for trustworthy characteristics

- Mechanisms for tracking identified AI risks over time

- Gathering and assessment of feedback about the efficacy of measurement
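Tracking identified risks over time can be as simple as recording a risk indicator each period and flagging when its rolling average crosses a limit. In this sketch the `MetricMonitor` class, the three-period window, and the 5% limit are hypothetical; in practice the limit would come from the organization’s risk tolerance.

```python
from collections import deque


class MetricMonitor:
    """Record a risk indicator each period and flag when its rolling mean exceeds a limit."""

    def __init__(self, name: str, limit: float, window: int = 3):
        self.name = name
        self.limit = limit                   # hypothetical limit derived from risk tolerance
        self.recent = deque(maxlen=window)   # only the last `window` values are kept
        self.history = []                    # (period, value, alert) tuples kept for documentation

    def record(self, period: str, value: float) -> bool:
        self.recent.append(value)
        rolling_mean = sum(self.recent) / len(self.recent)
        alert = rolling_mean > self.limit
        self.history.append((period, value, alert))
        return alert


monitor = MetricMonitor("user complaint rate", limit=0.05)
for period, value in [("Jan", 0.02), ("Feb", 0.04), ("Mar", 0.06), ("Apr", 0.08)]:
    if monitor.record(period, value):
        print(f"{period}: rolling {monitor.name} above {monitor.limit}")
```

Smoothing over a window trades a slightly slower reaction for fewer alerts triggered by a single noisy period; the `history` list doubles as an audit trail.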

Practices for measuring AI risks are outlined in the NIST AI RMF Playbook.

Manage

Finally, the MANAGE function of the AI RMF 1.0 involves the allocation of resources to treat mapped and measured risks as defined by the GOVERN function. Information and systematic documentation practices established under the GOVERN function and carried out under the MAP and MEASURE functions are used in the MANAGE function to reduce the probability of system failures and negative impacts, leading to effective AI risk management.

Once an organization completes the MANAGE function, it should have well-defined plans for prioritizing risks, monitoring them regularly, and improving risk management. It will also be better able to manage the risks of deployed AI systems and allocate risk management resources efficiently.

Categories of the MANAGE function boil down to:

- Prioritization, response to, and management of AI risks based on assessments and other analytical output from the MAP and MEASURE functions

- Planning, preparation, implementation, documentation, and informing of strategies to maximize AI benefits and minimize negative impacts

- Managing AI risks and benefits from third-party entities

- Documentation and regular monitoring of risk treatments, including response, recovery, and communication plans for identified and measured AI risks

Practices for managing AI risks are outlined in the NIST AI RMF Playbook.

AI RMF Profiles

The AI RMF 1.0 identifies three types of Profiles for AI risks. These Profiles assist organizations in deciding how to manage AI risks in a way that is aligned with their goals, considers regulatory requirements and best practices, and reflects risk management priorities:

- Use-case Profiles – Refers to implementations of the AI RMF for a specific setting or application (e.g., Customer Service Profile, Business Development Profile).

- Temporal Profiles – Describe either the current or the desired target state of AI risk management activities:

  - Current Profile – Indicates how AI risks are currently being managed.

  - Target Profile – Covers the outcomes needed to achieve the desired AI risk management goals. It can be compared with an organization’s Current Profile to identify gaps that need to be addressed to meet AI risk management objectives.

- Cross-sectoral Profiles – Refers to AI risks that apply across various sectors or use cases or AI risk management activities that can be implemented across sectors or use cases.
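Comparing a Current Profile with a Target Profile is essentially a gap analysis. The sketch below assumes each profile maps outcome identifiers (written in the style of AI RMF subcategory IDs such as "GOVERN 1.1") to a hypothetical 0 to 3 maturity level. The scale and the `profile_gaps` function are illustrative, not part of the Framework.

```python
def profile_gaps(current: dict, target: dict) -> dict:
    """Return outcomes where the Current Profile falls short of the Target Profile.

    Both profiles map an outcome ID to an assumed maturity level
    (0 = not in place, 3 = fully implemented). Outcomes absent from
    `current` are treated as level 0.
    """
    gaps = {}
    for outcome, wanted in target.items():
        have = current.get(outcome, 0)
        if have < wanted:
            gaps[outcome] = wanted - have
    # Largest gaps first; ties keep the Target Profile's order (sort is stable)
    return dict(sorted(gaps.items(), key=lambda item: item[1], reverse=True))


current = {"GOVERN 1.1": 2, "MAP 1.1": 1, "MEASURE 2.7": 3}
target = {"GOVERN 1.1": 3, "MAP 1.1": 3, "MEASURE 2.7": 3, "MANAGE 4.1": 2}
for outcome, shortfall in profile_gaps(current, target).items():
    print(f"{outcome}: {shortfall} level(s) below target")
```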

How can organizations use the NIST AI Risk Management Framework?

Now that you’re familiar with the NIST AI RMF, you can incorporate its practices and processes into your risk management strategy.

Organizations can start by identifying the objectives, data inputs, outcomes, and possible risks of their AI systems, then conducting a thorough risk assessment of each system to reveal potential threats, vulnerabilities, and impacts.

Next, classify AI systems into risk levels to determine which risks take top priority. Develop risk mitigation procedures and strengthen them through regular testing and validation of AI systems. Once a solid risk management process is in place, support it with comprehensive documentation and continuous monitoring, and communicate it to all relevant stakeholders and business units.
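Classifying AI systems into risk levels can start from a simple inventory. The rule below is hypothetical: it counts four risk factors raised earlier in this article (direct interaction with people, use of personal data, autonomous decision-making, and reliance on third-party components) and maps the count to a tier. Real criteria would come from the organization’s own risk tolerance and any applicable regulatory requirements.

```python
from dataclasses import dataclass


@dataclass
class AISystem:
    """Hypothetical inventory entry for one AI system."""
    name: str
    interacts_with_humans: bool
    uses_personal_data: bool
    makes_autonomous_decisions: bool
    third_party_components: bool


def risk_level(system: AISystem) -> str:
    # Hypothetical rule: each True factor counts as 1; 3+ is high, 2 is medium.
    factors = sum([
        system.interacts_with_humans,
        system.uses_personal_data,
        system.makes_autonomous_decisions,
        system.third_party_components,
    ])
    if factors >= 3:
        return "high"
    if factors == 2:
        return "medium"
    return "low"


TIER_ORDER = {"high": 0, "medium": 1, "low": 2}
inventory = [
    AISystem("demand forecasting", False, False, False, True),
    AISystem("loan pre-approval", True, True, True, False),
    AISystem("support chatbot", True, True, False, False),
]
for system in sorted(inventory, key=lambda s: TIER_ORDER[risk_level(s)]):
    print(f"{risk_level(system):>6}  {system.name}")
```

A factor count is deliberately coarse; its value is that it produces a consistent first-pass ordering that a deeper risk assessment can then refine.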

The NIST AI Risk Management Framework is one of dozens of frameworks and authority documents available in 6clicks’ Content Library. Take better control of your risk management process by using the NIST AI RMF alongside 6clicks’ Risk Management solution.

6clicks’ GRC software equips businesses and advisors with powerful capabilities such as Enterprise and Operational Risk Management, Audit & Assessment, Issues & Incident Management, and Policy & Control Management through an all-in-one, multi-tenanted platform.

With 6clicks’ Risk Management solution, you can automate risk assessment against your desired framework, make use of our extensive risk libraries and other turnkey content, build custom workflows and structured risk registers, and maximize 6clicks’ Reporting & Analytics capability to generate comprehensive reports and deliver critical insights in an instant.

Effectively manage AI risks with an integrated risk management solution

Harness the capabilities of 6clicks’ GRC software to streamline the entire risk management process, from identification and assessment to mitigation and monitoring.