The use of artificial intelligence has propelled global economic growth and enriched many aspects of our lives. However, its ever-evolving nature and growing prevalence across industries create the need for robust AI governance structures.

Various organizations and policymakers have established guidelines for the responsible use of AI to aid individuals and organizations in managing its potential risks and negative effects. Responsible AI refers to the ethical, transparent, and trustworthy use, development, and deployment of AI systems.

The International Organization for Standardization (ISO) and the International Electrotechnical Commission (IEC) have formulated the ISO/IEC 42001 to promote responsible AI practices among organizations utilizing or providing AI-based products and services. Let’s examine its scope, benefits, and objectives and how it can be used together with 6clicks’ Risk Management solution.

What is the ISO/IEC 42001?

The ISO/IEC 42001 is the world’s first AI management system standard that provides organizations with directives on how to establish, implement, maintain, and continuously improve an Artificial Intelligence Management System (AIMS).

As defined by ISO, an artificial intelligence management system comprises the different components of an organization working together to set the policies, procedures, and objectives for the development, provision, or use of AI systems.

The ISO/IEC 42001 standard establishes a structured framework for the responsible use of AI. It offers organizations an integrated approach to AI risk management from assessment to treatment and guides them in developing policies, defining objectives, and implementing processes required for an AI management system.

What are the benefits and features of the ISO/IEC 42001?

The ISO/IEC 42001 standard covers broad applications of AI and is designed to be used by organizations of varying types and sizes across diverse industries. Organizations can utilize the standard to:

- Govern the use, development, and provision of AI systems

- Guide the overall management of AI-specific and related risks

- Maintain brand reputation and enhance customer trust in AI applications

- Improve operational efficiency and reduce costs, and

- Explore AI opportunities and foster innovation within a structured framework

Here are the key features of ISO 42001:

Risk management as a cycle

The standard treats AI risk management as a cycle that must be repeatedly implemented, maintained, and improved. It also seeks to support continuous learning and adaptability by helping organizations understand dynamic and inscrutable AI systems.

Responsible AI implementation

The standard addresses ethical and social concerns associated with AI technologies and emphasizes the need for traceability, transparency, and reliability throughout the entire AI system lifecycle, from introduction to deployment.

Mandatory requirements and controls

The ISO/IEC 42001 features mandatory clauses and an Annex delineating controls for the use of AI systems, which include AI policy formulation, impact assessment processes, information transparency, roles and accountability, lifecycle objectives and measures, and more.

Compliance and certification

The standard supports compliance with legal and regulatory requirements and allows organizations to obtain certification against it after a successful audit by an accredited certification body. This involves demonstrating the qualities of a responsible AI system such as security, safety, robustness, and fairness.

Incorporation within organizational structures

Implementing the ISO/IEC 42001 requires a multidisciplinary approach involving various departments in an organization to seamlessly integrate AI management into existing organizational processes and facilitate informed decision-making at the organizational level.

Integration with other standards

ISO 42001 can be integrated with other Management System Standards (MSS) such as ISO/IEC 27001 (information security management), ISO/IEC 27701 (privacy information management), and ISO 9001 (quality management) and used in conjunction with other management disciplines.

The ISO/IEC 42001 standard also aligns with the principles of global legislation for responsible AI, such as the European Union’s Artificial Intelligence Act and the US National Artificial Intelligence Initiative Act.

What is the ISO/IEC 42001 AI management system framework?

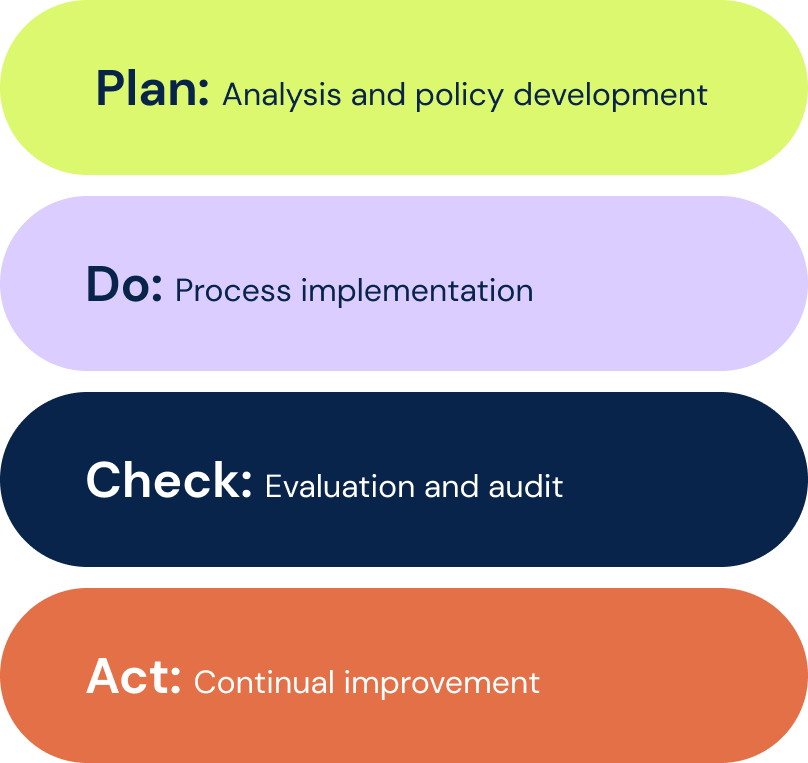

The ISO/IEC 42001 standard employs the Plan-Do-Check-Act (PDCA) cycle within the context of AI management. This enables organizations to follow a systematic and iterative method in executing, controlling, and continually improving an AI management system.

Plan: Analysis and policy development

Planning is the first stage of the PDCA cycle. Within the scope of ISO 42001, this involves establishing organizational context and identifying key stakeholders. A clear understanding of the needs as well as the internal and external factors that can impact the organization and its objectives must be developed during this phase. Interested parties and stakeholders as well as their needs and expectations must also be defined.

The planning stage also entails the formulation of an AI policy based on the comprehensive analysis of the organization’s objectives, risks, and opportunities related to AI. This AI policy will then serve as a manual for establishing responsible AI practices within the organization.

Do: Process implementation

Once the AI policy is in place, it’s time to put plans into action. The second phase of the cycle within the ISO/IEC 42001 framework is dedicated to the implementation of the AI management system policies and procedures and consists of operational planning and establishing controls.

In this stage, AI risk assessment and treatment processes are embedded in the organization's operational framework. Robust procedures are implemented to systematically identify, evaluate, and mitigate potential risks associated with AI applications. An AI system impact assessment process must also be established to measure potential effects on individuals, organizations, or society.

Check: Evaluation and audit

The Check phase in the ISO 42001 framework encompasses the monitoring, measurement, and evaluation of the performance and effectiveness of the AI management system. The organization must conduct internal audits to assess the extent to which AI management criteria are met. This stage also ensures that AI practices align with the organization’s policies and objectives and relevant regulations and requirements.

Act: Continual improvement

The last stage in the framework involves continual improvement and corrective action. This is where the organization must identify areas for improvement and execute corrective measures to address nonconformities and prevent their recurrence. By adopting a proactive and adaptive approach, organizations can maintain the relevance and effectiveness of their AI management system and ensure it continuously evolves alongside the dynamic AI landscape.
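The iterative nature of the four stages above can be pictured as a simple loop. The following is only a minimal sketch: the `Phase` enum and the `pdca_phases` helper are hypothetical names invented for illustration, and the phase descriptions are taken from the stage headings in this article rather than from the text of the standard.

```python
from enum import Enum
from itertools import cycle, islice


class Phase(Enum):
    # Descriptions mirror the stage headings used in this article.
    PLAN = "Analysis and policy development"
    DO = "Process implementation"
    CHECK = "Evaluation and audit"
    ACT = "Continual improvement"


def pdca_phases(iterations):
    """Yield (cycle number, phase) pairs for the given number of full cycles."""
    phases = list(Phase)  # Enum members iterate in definition order
    steps = islice(cycle(phases), iterations * len(phases))
    for index, phase in enumerate(steps):
        yield index // len(phases) + 1, phase


for iteration, phase in pdca_phases(2):
    print(f"Cycle {iteration} - {phase.name}: {phase.value}")
```

The point of the sketch is that Act is not an endpoint: after each Act phase, the loop returns to Plan with the lessons from the previous cycle.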

What are the mandatory requirements of the ISO/IEC 42001?

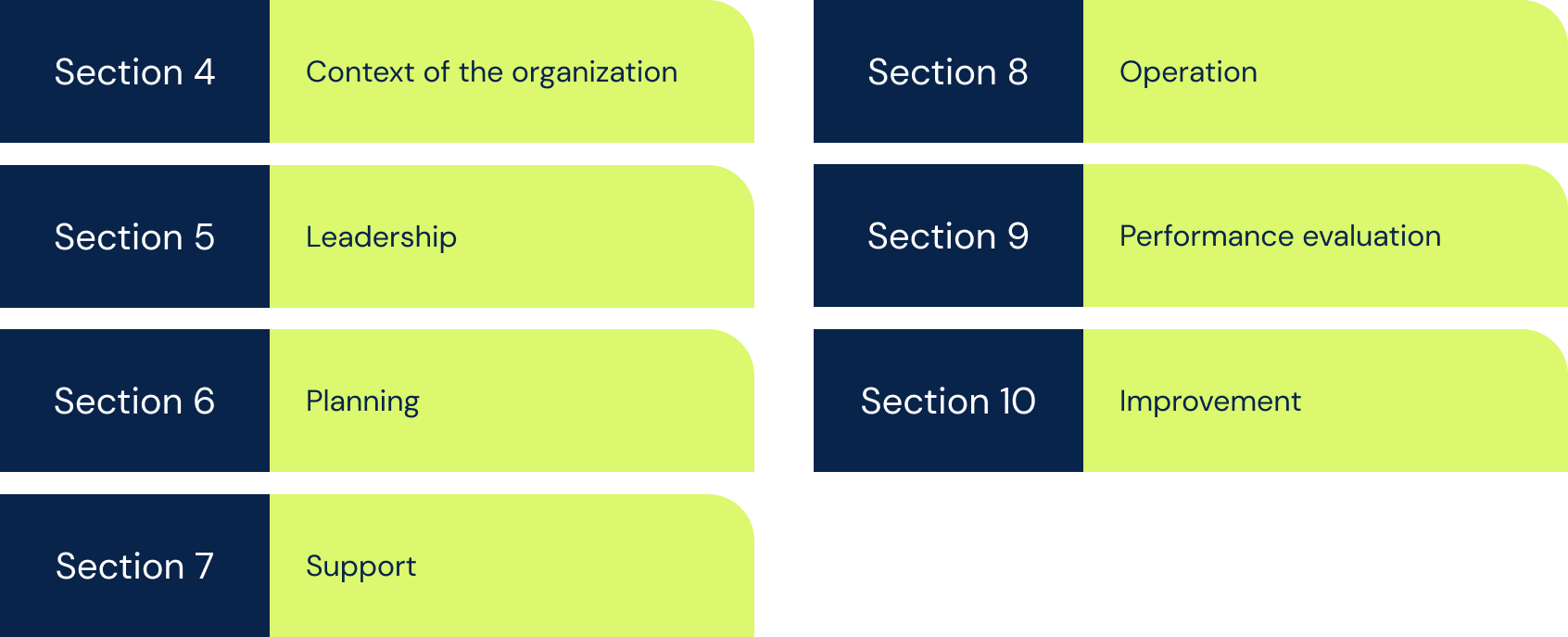

In sections 4 to 10, the standard details a set of mandatory clauses that define the requirements for an organization’s AI management system. They are summarized below:

Section 4: Context of the organization

Organizations must determine their organizational setting or environment, stakeholders, and the AI management system's scope.

Section 5: Leadership

The objectives of the AI system must be documented, monitored, and communicated to management. Leadership must take charge of planning, delegation of responsibilities, resource allocation, and evaluation to support the AI management system.

Section 6: Planning

An AI policy, which includes the perceived AI risks and opportunities of the organization, must be developed and documented. The AI risk assessment process must be based on predefined criteria, be consistent with the organization’s AI policy and objectives, and produce comparable results when assessments are repeated. It must also incorporate AI system impact assessment and prioritize risks for treatment.
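To illustrate what "predefined criteria" and "comparable results" can look like in practice, here is a minimal sketch of a risk register that scores and prioritizes risks. Everything in it is an assumption made for illustration: the standard does not prescribe a scoring formula, and the 1-to-5 scales, the likelihood-times-impact score, the threshold of 12, and the example risks are all hypothetical.

```python
from dataclasses import dataclass

# Hypothetical criteria; ISO/IEC 42001 does not prescribe these values.
SCALE = range(1, 6)          # scores run from 1 to 5
TREATMENT_THRESHOLD = 12     # assumed risk-acceptance criterion


@dataclass(frozen=True)
class AIRisk:
    name: str
    likelihood: int  # 1 (rare) to 5 (almost certain)
    impact: int      # 1 (negligible) to 5 (severe), informed by impact assessment

    def __post_init__(self):
        # Fixed scales keep repeated assessments comparable.
        if self.likelihood not in SCALE or self.impact not in SCALE:
            raise ValueError(f"{self.name}: scores must be between 1 and 5")

    @property
    def score(self) -> int:
        return self.likelihood * self.impact


def prioritize_for_treatment(risks, threshold=TREATMENT_THRESHOLD):
    """Return risks at or above the threshold, highest score first."""
    needing_treatment = [r for r in risks if r.score >= threshold]
    return sorted(needing_treatment, key=lambda r: r.score, reverse=True)


register = [
    AIRisk("Biased training data", likelihood=4, impact=4),
    AIRisk("Model drift in production", likelihood=3, impact=3),
    AIRisk("Unexplained automated decisions", likelihood=3, impact=5),
]

for risk in prioritize_for_treatment(register):
    print(f"{risk.name}: {risk.score}")
```

Because the scales and threshold are fixed up front, two assessors scoring the same risk months apart work against the same criteria, which is the property the planning requirement is after.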

Section 7: Support

Comprehensive training for the workforce involved in the AI management system must be enforced. Employees must have a solid grasp of the technical facets, ethical and social considerations, biases, and risks surrounding AI to effectively support AI management processes.

Section 8: Operation

Organizations must recognize that operational planning and control constitute the foundation of a successful AI management system. The standard requires organizations to define the necessary controls and specific activities for AI risk treatment and management.

Section 9: Performance evaluation

The standard defines clear provisions for monitoring, measuring, analyzing, and evaluating an AI management system. Organizations must monitor whether AI systems are functioning as intended and perform a quantitative and qualitative measurement of their performance. They must also derive insights into both the areas where the AI system succeeds and the areas where improvements are needed. Lastly, organizations must conduct internal audits to ensure compliance with the standard.

Section 10: Improvement

ISO 42001 places emphasis on continual improvement and fosters a culture of ongoing enhancement. It requires organizations to set recurring activities to enhance AI-related processes, objectives, and outcomes. AI risks and opportunities must be prioritized efficiently and improvement efforts must be focused on those with the most significant impact. Organizations are also prompted to stay on top of advancements in AI, shifts in regulatory frameworks, and emerging ethical considerations.

Annex A: Controls

Under Annex A, the ISO/IEC 42001 standard also provides a list of controls that spans various aspects of the use, development, and deployment of AI systems. This helps organizations meet AI objectives and address concerns identified during the risk assessment process:

- AI policy formulation – An AI policy must align with other organizational policies and undergo regular reviews to maintain effectiveness

- Impact assessment processes – Comprise analysis of the potential impact of AI systems on individuals, organizations, and society

- Information transparency – Tackles the dissemination of AI system information to interested parties in the form of external reporting, communication of incidents, and more

- Internal organization – Covers roles and responsibilities, reporting of concerns, and other measures for promoting accountability

- Lifecycle objectives and measures – Outlines processes throughout the AI system lifecycle including the definition of system requirements, development, operation, and monitoring

- Use of AI systems – Covers the responsible and intended use of AI systems in accordance with the organization’s AI objectives

- Resource documentation – Encompasses resources for AI systems such as data, tooling, system and computing resources, and human resources

- Data management – Involves data quality, provenance, and preparation for AI systems

- Third-party and customer relations – Addresses third-party relationships with suppliers, customers, and more
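One simple way to work with the control areas listed above is to track their implementation status and report the gaps. The sketch below assumes a hypothetical internal checklist: the status values, the `find_gaps` helper, and the example data are invented for illustration and are not part of the standard or of any 6clicks feature.

```python
# Control areas as summarized in the list above.
CONTROL_AREAS = [
    "AI policy formulation",
    "Impact assessment processes",
    "Information transparency",
    "Internal organization",
    "Lifecycle objectives and measures",
    "Use of AI systems",
    "Resource documentation",
    "Data management",
    "Third-party and customer relations",
]

# Hypothetical status vocabulary for an internal checklist.
VALID_STATUSES = {"implemented", "in_progress", "not_started"}


def find_gaps(status_by_area):
    """Return control areas that are not yet fully implemented, in list order."""
    unknown = set(status_by_area.values()) - VALID_STATUSES
    if unknown:
        raise ValueError(f"Unknown status values: {sorted(unknown)}")
    # Areas missing from the input are treated as not started.
    return [
        area for area in CONTROL_AREAS
        if status_by_area.get(area, "not_started") != "implemented"
    ]


# Invented example data.
example_status = {
    "AI policy formulation": "implemented",
    "Impact assessment processes": "in_progress",
    "Data management": "implemented",
}

gaps = find_gaps(example_status)
print(f"{len(gaps)} of {len(CONTROL_AREAS)} control areas still need work")
```

Treating unlisted areas as not started is a deliberately conservative choice: a control area only drops off the gap list once someone has explicitly marked it as implemented.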

How can organizations use the ISO/IEC 42001 with 6clicks’ GRC software?

The ISO 42001 standard is available on the 6clicks Content Library for users to freely download and utilize for their risk and compliance needs. 6clicks’ GRC software equips organizations, advisors, and managed service providers with a range of capabilities such as Risk Management, Compliance Management, Audit & Assessment, Controls & Policies, and integrated content.

6clicks’ AI engine, Hailey, can map standards like the ISO/IEC 42001 as well as other frameworks and regulations all at once and run a single audit and assessment against all of them to help organizations determine their compliance with each.

Organizations can also leverage other 6clicks content such as control sets, obligation sets, risk libraries, and audit and assessment templates along with ISO 42001 for various risk and compliance activities. With 6clicks’ Reporting and Analytics capability, you can gain powerful insights into your risk and compliance management processes which can help drive better decisions for your organization.

Key takeaways

- The ISO/IEC 42001 promotes responsible AI governance in organizations and helps them effectively address AI risks and opportunities

- ISO 42001 adopts the Plan-Do-Check-Act methodology to guide organizations in developing, monitoring, maintaining, and continually improving their AI management systems

- Organizations can take advantage of the 6clicks platform to perform comprehensive audits and assessments, demonstrate their compliance with ISO 42001 and other standards, and enhance their risk management strategy

Empower your organization with robust risk and compliance management

Optimize your risk and compliance management processes with 6clicks and effectively navigate the growing AI landscape. Discover why organizations and managed service providers choose 6clicks as their preferred GRC platform.

Written by Louis Strauss

Louis is the Co-founder and Chief Product Marketing Officer (CPMO) at 6clicks, where he spearheads collaboration among product, marketing, engineering, and sales teams. With a deep-seated passion for innovation, Louis drives the development of elegant AI-powered solutions tailored to address the intricate challenges CISOs, InfoSec teams, and GRC professionals face. Beyond cyber GRC, Louis enjoys reading and spending time with his friends and family.